At Replay, we’re building a true time-travel debugger for JavaScript. Our technology records everything that happens in a browser, so you can use it to debug any page regardless of what frameworks or libraries were used to build it. That said, framework-specific devtools are a valuable part of the debugging experience.

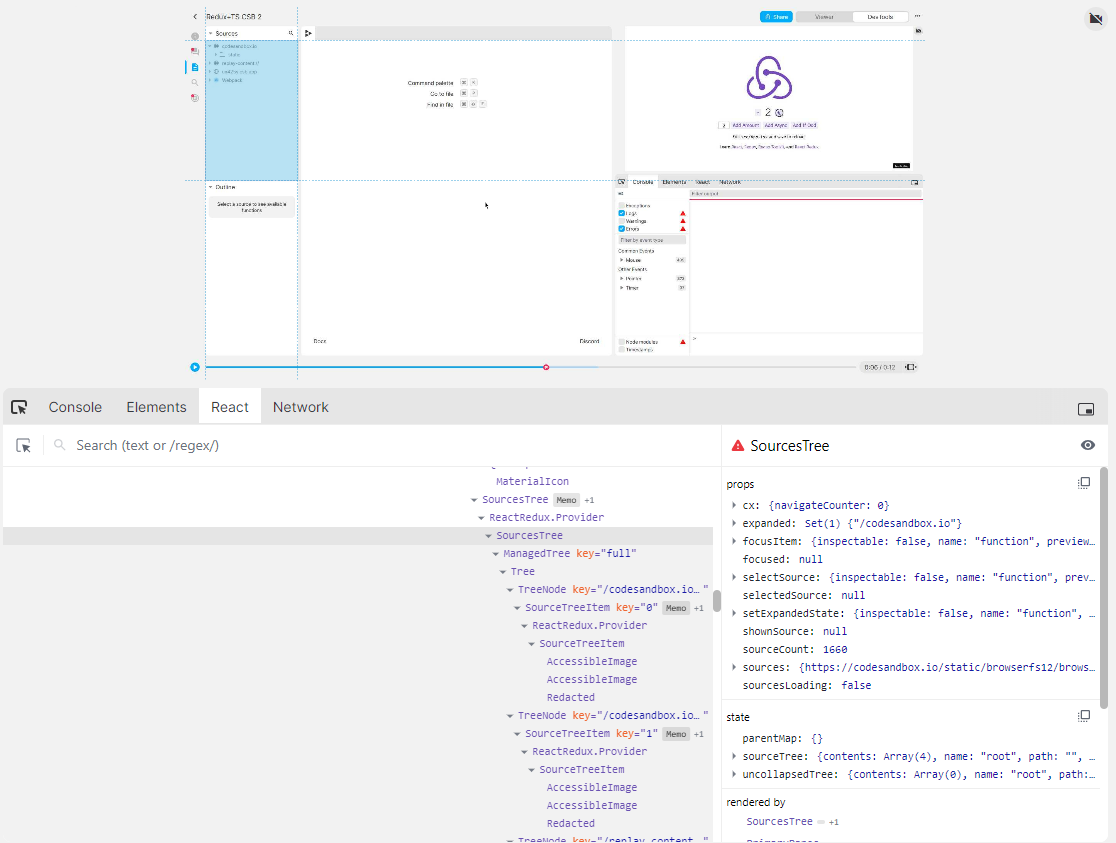

One of React’s greatest strengths is the ability to inspect your application with the React DevTools (“RDT”) browser extension. The extension lets developers view the current component tree structure, and inspect the props and state of a given component instance. Normally, the React DevTools are used to interact with a live page in the browser, but we had previously integrated the React DevTools as a component in our app, with the necessary data being captured during the initial recording process:

The magic of time-travel debugging is that it lets you tackle problems in new ways that you’d never thought of before. Not only can we inspect the behavior of a program at any point in time, we can start to think of the original runtime behavior and application execution as data that can be queried to give us insight into what happened, and we can use that data to come up with novel techniques and solutions to problems.

So… what if we could capture the data needed to support the React DevTools while replaying the recording? Not only would this make our recording process faster, it would also help unlock future enhanced debugging capabilities like React Component Stacks.

This is the story of how the React DevTools work, how we originally implemented support for capturing React DevTools data during recording, and how we rebuilt that support using “Replay Routines” - a new and novel technique based on runtime analysis.

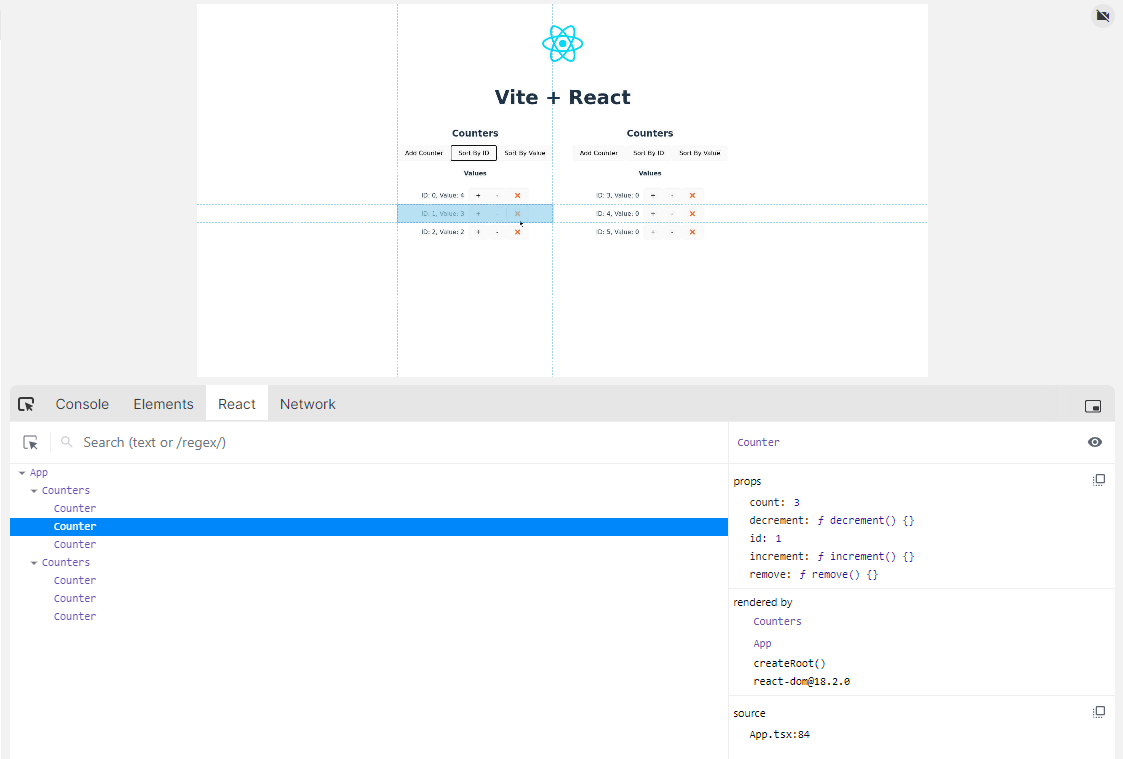

TL;DR: we rebuilt Replay’s React DevTools support using our time-travel debugging API, and it works!

Background

Replay’s recording capability is built into our custom forks of the Firefox and Chrome browsers and Node runtime. Firefox has been our primary recording browser thus far, but in Q4 2022 we started improving the Chrome fork to reach feature parity with Firefox. One of the missing features in Chrome was React DevTools support.

It’s worth stepping back and recapping how we initially implemented React (and initial Redux) DevTools support in our Firefox fork. That also requires a detour to talk about how the React DevTools themselves are implemented.

How Do the React DevTools Work?

- the “backend” logic that is injected into every web page, talks to React as it renders, and collects information about the changes to the React component tree

- The RDT UI components that normally are displayed inside of the browser’s own devtools. The RDT UI layer parses the tree operation data, formats it, and renders the tree of component names.

Every time you open a web page, the RDT extension “backend” logic is injected into the page, and adds a global “hook” object to

window.__REACT_DEVTOOLS_GLOBAL_HOOK__. (Despite the “hook” name clash, this has nothing to do with hooks in components like useState or useEffect.) Later, when the React renderer initializes itself, it looks for the global hook, and adds itself to the hook’s list of renderers in the page.Every time the React renderer finishes a render pass and “commits” the changes to update the component tree, it calls

hook.onCommitFiberRoot(renderID, fiberRoot). The RDT extension “backend” logic then traverses the “fiber” objects that make up the component tree, and determines which components were added, updated, or re-ordered. Similarly, every time a component is removed, React calls hook.onCommitFiberUnmount(rendererID, fiber).Internally, React tracks IDs for each node, and describes modifications to the tree in terms of adding, moving, and rearranging nodes by ID. The RDT logic then creates an “operations” array that describes the changes to the component tree structure, and encodes all of the changes as numbers. This optimizes the size of the data that has to be sent across a

window.postMessage() bridge to the RDT extension UI layer.As an example, say we have a component tree that looks like this:

javascript<App> // ID: 24 <Counters> // ID: 42 <Counter> // ID: 111 <Counter> // ID: 173 <Counter> // ID: 246

If we ran some logic that re-sorted the counters based on their current values, React would describe this by listing the new order of the children with their IDs. For example, the operations array

[1,19,0,3,42,3,111,173,246] translates into:plain textoperations for renderer:1 and root:19 Re-order node 42 children 111,173,246

The RDT UI receives the operations array, parses the operations data, and updates its internal data structures to reflect the new component tree structure, including the names of each component.

This operations data only describes the structure of the component tree - it doesn’t include any additional information about hooks, props, or state.

When you click on a component entry, the RDT UI then needs to ask the “backend” logic in the page for information about that component instance. On click, the UI layer sends a request for information across the bridge with

window.postMessage(). The backend logic looks up the appropriate fiber object for that component, formats a description of its data, and sends it back to the UI layer for display in the RDT sidebar.This means that the backend logic has to be installed and available in the page, so that the extension UI layer can request per-component data on demand as you click on entries in the extension’s component tree.

Replay’s React DevTools Support in Firefox

We initially added RDT support to our Firefox fork in mid-2021.

The FF implementation takes the entire pre-built RDT extension “backend” bundle file, pastes it into our

gecko-dev repo, and modifies the browser’s internals to automatically import and load that backend logic into every page you open with FF. This means the window.__REACT_DEVTOOLS_GLOBAL_HOOK__ will exist in every page that’s being recorded, and if the page uses React, it will attach itself to the global hook object and track component tree updates.However, we applied hand-edits to the RDT backend extension bundle to modify its behavior. In particular, we overrode the line where it called

window.postMessage() to send the data to the extension UI. Instead, we save the tree operations data to our database. Replay’s recording data format allows having additional metadata entries called “annotations” to be added later. We stringify the operations arrays and save them in an annotations object, like:json{ point: "24663410078278296235742819197649521", time: 10696, kind: "react-devtools-bridge", contents: '{"event":"operations","payload":[1,19,0,3,42,3,111,173,246]}', }

When you open up a recording of a React app, the UI layer asks the backend for all annotations of kind

"react-devtools-bridge". Any time you jump to a pause point in the recording, it renders the RDT UI components, parses the operations arrays up to that point in time, and feeds those into the RDT UI layer so it has a correct copy of the component tree based on those operations.When you click on a component entry, the RDT UI layer has to ask the backend logic for data on that component. Since Replay’s time-traveling has real browser process forks in the cloud for each pause point, we can intercept that request message, evaluate it in the paused browser instance, and extract the function result that the backend logic in the paused browser returned. (This may sound like magic, and to some extent it is! But it’s also a logical outgrowth of what you can do with time-travel debugging.)

Earlier this year, I spent a few days implementing an initial proof of concept for Redux DevTools support in Firefox as well. I used the same basic approach: build the Redux DevTools extension JS bundle, paste it into our

gecko-dev repo, load that into every page being recorded, hijack the data that would have been sent to the extension UI, and save it as annotations for later display while debugging. It worked, but also turned out to be capturing too much information, so we had to turn off that feature until further notice.Replay’s Recording Analysis APIs

The final piece of background is Replay’s analysis APIs.

Replay’s backend offers an incredibly powerful set of APIs for interacting with the recording, mostly modeled after the Chrome DevTools protocol.

This API is publicly documented at replay.io/protocol, and contains dozens of methods for analyzing the behavior of the recorded program. You can retrieve source file contents, read stack frames, inspect the contents of variables, fetch DOM node information, retrieve console messages, and much more. A typical snippet of analysis logic might look like:

typescript// Snippet: introspect variables in a given stack frame to look // for objects that appear to be React Fiber instances: const pause = await client.Session.createPause({ point }, sessionId) const secondFrame = pause.data.frames![1] const secondFrameSourceId = secondFrame.location[secondFrame.location.length - 2].sourceId const frameSource = sources.find( source => source.sourceId == secondFrameSourceId )! if (!frameSource.url!.includes('react-dom')) { console.log('Not a React component render') return } // Get local scope bindings const localScopeId = secondFrame.scopeChain[0] const scope = await client.Pause.getScope( { scope: localScopeId }, sessionId, pause.pauseId ) // Loop over the bindings to find the React fiber node for (const obj of scope.data.scopes![0].bindings!) { if (obj.object) { const preview = await client.Pause.getObjectPreview( { object: obj.object }, sessionId, pause.pauseId ) // more variable introspection omitted } }

Replay’s debugger client uses this API for all of our debugging functionality, but the API can be used by other programs as well. We have an example repo at Protocol Examples that demonstrates some other small scripts that leverage the protocol API to perform introspection on recordings. One example retrieves line hit count information and generates HTML code coverage reports for the source files, and another digs into the guts of React’s internal fiber objects to determine if a component is mounting or re-rendering.

Replay’s debugger client is incredibly useful, but we’re still barely scratching the surface of the capabilities offered by the protocol API.

Using “Routines” for Recording Analysis

In early 2022, we started discussing the idea of an alternate approach for React DevTools support. What if we used our protocol API to introspect the contents of the React renderer in a recording and capture the necessary operations data?

One reason for possibly changing is that the version of the RDT backend logic in a recording is fixed to whatever version was used in that Firefox build. There was one major change to the RDT “operations” data format in the last year, and we had to start checking that in our client code and load the matching version of the RDT UI components that know how to process the appropriate data format. It would be nice to avoid that scenario in the future in case there are any other RDT data format changes. Also, removing the in-browser extension logic means less overhead during the actual recording process.

Brian Hackett, Replay’s CTO, did some initial proof of concept work to try parsing the RDT operations data using our analysis APIs, and proved we could pull out some of the info.

In late 2022, we revisited this idea, and Brian Hackett came up with a new concept called “analysis routines”. The idea is to break up the work of performing recording analysis into two parts: marking interesting timestamps while recording, and then running the analysis while replaying to extract the relevant data from those recording timestamps.

Brian built out scaffolding in our backend repo for writing “routines”, and running them after a recording has been uploaded and opened. He then ported his initial RDT analysis POC code over to run inside of one of these “routines”, and set up a script to enable executing a routine locally against a given recording for development work.

Browser Integration, Stubs, and Annotation Timestamps

In order to identify “interesting” timestamps in a recording, we need to create and save initial annotation entries during the actual recording process. In the case of React, we care about when the React renderer tried to connect to the global “hook” object during initialization, and each time the renderer committed updates to the component tree.

To track these, we modified our Chromium fork to add some small “stub” functions to each page. We created functions that mimic the signatures of the real RDT hook object methods, but instead of doing real React processing, just save annotations with a timestamp, marking that point in time for future analysis processing:

javascript// Some of our custom Chromium "stub" logic: const stubHook = { supportsFiber: true, inject, onCommitFiberUnmount, onCommitFiberRoot, onPostCommitFiberRoot, renderers: new Map() } window.__REACT_DEVTOOLS_SAVED_RENDERERS__ = [] Object.defineProperty(window, '__REACT_DEVTOOLS_GLOBAL_HOOK__', { configurable: true, enumerable: false, get() { return stubHook } }) let uidCounter = 0 function inject(renderer) { const id = ++uidCounter window.__RECORD_REPLAY_ANNOTATION_HOOK__('react-devtools-hook', 'inject') window.__REACT_DEVTOOLS_SAVED_RENDERERS__.push(renderer) return id } function onCommitFiberUnmount(rendererID, fiber) { window.__RECORD_REPLAY_ANNOTATION_HOOK__( 'react-devtools-hook', 'commit-fiber-unmount' ) }

With these stubs injected into each page, React thinks it’s talking to the real RDT extension global hook object during the recording, and will try to call these functions as it updates the component tree.

You’ll note that this logic is pretty hacky and incredibly specific to the internals of the RDT implementation :) We don’t have a good way to generalize this yet, but it’s a pattern that ought to be repeatable for future integration with other framework devtools.

Writing Annotations for Processing Recordings

I took over Brian Hackett’s proof of concept for an RDT “analysis routine”, and began exploring the code to understand the architecture and usage for routines.

Our backend repo is internal and proprietary, but at a high level:

- Each “routine” is written as a separate file that exports an object with

{name, version, runRoutine, shouldRun}. Those entries get added to a global list of available routines.

- As a recording is being loaded for debugging, the backend checks each routine entry to see if it should process this recording, based on the recorded browser type and version

- Each routine is spun off as a background task for execution. The routine can use any of our protocol analysis APIs to introspect the recording, and saves new annotations. Those annotations are cached, and will be used on future debugging sessions for this recording.

A stripped-down version of a typical routine might look like this:

typescriptinterface ExtractedData { point: ExecutionPoint time: number someRoutineSpecificData: number } async function runReactDevtoolsRoutine(routine: Routine, cx: Context) { // Collect the annotations that were created during the recording. // These mark the "interesting" timestamps we want to process. const annotations = await routine.getAnnotations( 'some-annotation-from-our-browser-fork', cx ) // For each of those timestamps, do further processing using // Replay's protocol API. The exact introspection steps // and returned data will be specific to each routine. const allPointResults: ExtractedData[] = await Promise.all( annotations.map(async annotation => { const { point, time, contents } = annotation const { message } = JSON.parse(contents) const evaluationArgs: EvaluationArgs = { routine, point, cx } const someRoutineSpecificData = await retrieveUsefulDataForTimestamp( evaluationArgs, message ) return { point, time, someRoutineSpecificData } }) ) // Save the final annotations with the processed data, // so that the Replay client can load these and use for display. for (const { point, time, someRoutineSpecificData } of allPointResults) { const annotation: Annotation = { point, time, kind: 'some-final-annotation-for-routine', contents: JSON.stringify({ event: 'data', payload: someRoutineSpecificData }) } routine.addAnnotation(annotation) } } // Define the routine entry so that the backend can run it as needed export const ExampleRoutine: RoutineSpec = { name: 'Example', version: 2, runRoutine: runExampleRoutine, shouldRun: ({ runtime, date, platform }) => runtime == 'chromium' }

Evaluating Code in Recording Pauses

Replay’s time-travel backend has the ability to pause a browser instance at any timestamped “execution point” in the recording. Conceptually, you can think of an execution point as being similar to an incrementing counter of JS lines of code executed by the interpreter.

When you call the

Session.createPause protocol method, Replay’s backend forks off another copy of the browser, runs it to that point in time, and then halts. From there, you effectively have a real running browser instance up in the cloud that you can interact with remotely.All of the key protocol methods require that you first create a pause and include the generated

pauseId in the API call, so that requests for things like DOM node data or JS variable contents are evaluated at that point and scope.However, a pause is conceptually mutable. You can evaluate arbitrary chunks of JS code within the browser at that pause point, either inside of a specific stack frame, or in the global scope. This is done by sending a string containing JS code to the backend, which then does the equivalent of

const result = eval(jsCode) in that scope. Evaluation results are returned from the backend, so this can be used for reading data. However, it can also be used to mutate the state of the JS environment as well!For example, say that the original recorded app had

let obj = {a: 1} in the function we’re paused at. If we evaluated the string "obj.a = 2" in that pause point via the protocol, then it really does mutate obj.a in that paused browser instance! If we then retrieved the contents of obj.a, we’d see 2 instead of 1.Replay’s backend actually remembers any evaluation commands for a given pause point. If it happens to unload that region of the recording from memory and then reload it, the same evaluations will be re-applied.

This means that each unique pause acts like a mutable scratchpad, and can have its in-memory contents changed by evaluating strings of JS code. Additionally, you can create many different unique pause instances for a given execution point, with each a separate encapsulated environment from the others.

React DevTools Routine Implementation

I spent a couple days spelunking through the guts of the actual React DevTools source to understand the general data flow and architecture. Once I had a handle on that, I was able to sketch out a tentative plan for how this all might work:

- Fetch the original in-recording annotations that marked all the timestamps where React had committed an update to the component tree and tried to update the DevTools, as captured by our stub functions inside Chromium

- For each commit timestamp, use pause code evaluation to:

- Delete the stub global “hook” object we’d added to Chromium

- Inject the entire React DevTools global hook installation bundle and evaluate it, so that the real hook exists in that pause point’s browser instance

- Update that global hook with the real React renderer instance, as captured by the stub functions, to match how it would have been configured if the real DevTools had been part of the page originally

- Inject the full RDT “backend” bundle and evaluate it, so that the rest of the RDT logic is in the pause

- Take the arguments that React had passed to our stub callback functions, and forward those to the real RDT hook processing callbacks, so that the RDT backend logic does its normal walk through the component fiber tree and determines what’s changed in this commit

- Capture the final “operations” array, and serialize it back to the routine script

- Map the array of operations+timestamps, and turn those into annotations

- Write the annotations to our backend for persistence

Modifying the React DevTools Bundles

I started by cloning the React repo and following the steps to build the existing extension bundles. The two key files I needed were

installHooks.js and react_devtools_backend.js.From inspecting the code, I knew that I’d have to make some hand-edits to these bundles. For example, the normal setup process involved waiting for a signal to be sent from the extension UI, so I had to find that setup logic and modify it to run synchronously as the bundle was being evaluated.

I quickly discovered that any error thrown during the evaluation step caused the entire evaluation to fail, and that slapping a

try/catch around the entire code to be evaluated didn’t actually prevent that. Unfortunately, due to the combo of how Replay’s protocol API is structured, and the in-progress work for Chrome support, it was very hard to retrieve actual error messages or stack traces.I had to resort to some very hacky and manual techniques for debugging failures. I updated my evaluation code to add a

window.logMessage(text) function that just pushed messages into an array in the paused browser, and then retrieved the array and printed it locally at the end of my script. I then started inserting dozens of window.logMessage("Got to here!") lines, commented out code until I could see it getting to a certain spot, and then uncommented the next few lines until I’d see it crash and could narrow down the relevant lines.I eventually found that most of the errors were due to the RDT logic attempting to access

localStorage - apparently any use of localStorage in an evaluation throws an error. Fortunately, we don’t need any of that, so I just deleted those lines from the bundles.I also had to make changes to modify how the RDT logic generates IDs for

Fiber objects. Normally, the RDT internals generate a GUId the first time it sees a given Fiber instance. That won’t work in our case, because each pause is a distinct environment, and we wouldn’t get consistent IDs for the same actual Fiber instance across different pauses.Fortunately, Brian Hackett had been working on initial support for “persistent object IDs”, where our browser fork internals are modified to do its own tracking of specific object instances and create a unique ID for each of those. In this case, he hacked in support for persistent IDs just for objects that appear to be React

Fiber instances, and I updated the RDT logic to use those instead of generating GUIDs.After a few days of continual trial and error, I was able to successfully inject and evaluate the RDT bundles without any further errors being thrown, but I wasn’t correctly capturing the operations array data. I had to make some more edits to the stub functions in our Chromium fork to capture some additional critical object references, and tie those into the injected hook during the evaluation process.

Happily, this actually worked! I was eventually able to collect and serialize the operations array for a given pause point’s React commit, and then expand that to fetch the operations for all known commit timestamps in parallel.

I knew I’d eventually have to figure out how to get this logic deployed as part of our backend, but as a proof of concept, I copy-pasted the generated annotations array over into our client-side repo, commented out the lines that tried to fetch annotations from the database, and used the pasted annotations instead. Sure enough, the RDT UI logic just worked! I could see the correct components in the RDT tree view, and they changed over time as I jumped to different points in the recording.

The final core logic for fetching the operations data for a single timestamped point looks like this:

typescriptasync function fetchReactOperationsForPoint( evaluationArgs: EvaluationArgs, type: 'inject' | 'commit-fiber-root' | 'commit-fiber-unmount' ): Promise<number[][]> { // Delete the stub `REACT_DEVTOOLS_GLOBAL_HOOK` object from our browser fork, // so that we can install the real React DevTools instead. await evaluateNoArgsFunction(evaluationArgs, mutateWindowForSetup) // Evaluate the actual RDT hook installation file, so that this pause // has the initial RDT infrastructure available await evaluateNoArgsFunction(evaluationArgs, installHookWrapper) await evaluateNoArgsFunction(evaluationArgs, readRenderers) // When we install the rest of the RDT backend logic, it will emit an // event containing the operations array. Subscribe to that event // so that we can capture the array and retrieve it. await evaluateNoArgsFunction(evaluationArgs, subscribeToOperations) // Evaluate the actual RDT backend logic file, so that the rest of the // RDT logic is installed in this pause. await evaluateNoArgsFunction(evaluationArgs, reactDevToolsWrapper) // Our stub code saved references to the React renderers that were in the page. // Force-inject those into the RDT backend so that they're connected properly. await evaluateNoArgsFunction(evaluationArgs, injectExistingRenderers) // There's two primary React events that we care about: // - a "root commit", which adds or rearranges components in the tree // - an "unmount" event, which removes a component from the tree // In either case, we're paused in our stub wrapper function, with the right // function args in the current scope. // Call the real RDT hook object function with those arguments, which will // do the appropriate tree processing and emit an event with the operations array. if (type === 'commit-fiber-root') { await evaluateNoArgsFunction(evaluationArgs, forwardOnCommitFiberRoot) } else if (type === 'commit-fiber-unmount') { await evaluateNoArgsFunction(evaluationArgs, forwardOnCommitFiberUnmount) } // Serialize the operations array that we captured in a global `window` field. const operationsText = await evaluateNoArgsFunction( evaluationArgs, getOperations ) const { operations } = JSON.parse(operationsText) as { operations: number[][] } return operations }

Forking the React DevTools

All of these changes to the RDT bundles were done via hand-edits, but I knew that wasn’t how we wanted to maintain them long-term.

Once I had nailed down all the necessary changes to the original RDT bundles, I went back to the React repo fork, and found the relevant locations in the original source code. I created a new branch, applied matching changes to the original source, rebuilt the bundles with the changes, and confirmed that I could still run all the operation extraction steps with the freshly-built bundles. That way, we can easily maintain our modifications and integrate any future updates to the RDT source, by merging down from the main React repo.

I also noted that the RDT bundles were upwards of 500K apiece unminified. I briefly debated minifying them, and confirmed that would shrink them to around 125K, but decided to skip that step for now.

However, I did note that the bundles contained some chunks of code we really didn’t need in our case. There were some files related to UI components or styling constants, and code for that

localStorage persistence. Interestingly, one of the biggest pieces of the bundles seemed to be the entire NPM semver package, which took up a whopping 38K of each bundle. I checked the usages, and found a couple places where the RDT logic was doing checks like if (gte("17.0.2", version)). That seemed rather wasteful.I did some quick searching and found a 10-line

semvercmp function that just did string splitting and numerical checks. I removed the semver imports and used that instead, and was able to shrink the generated bundle size noticeably. (I plan on submitting those changes as an upstream patch to the React repo shortly.)The actual changes in our fork branch are fairly minimal, as seen in this diff:

React DevTools Client-Side Implementation

As soon as I tried clicking on a component node in the RDT tree to view its props, that request timed out and failed. After thinking about it, I realized that the RDT relies on having the real backend logic in the page so it can request component data on demand. Our paused browser instance in the cloud only had the stub functions, not the real RDT backend, since all that injection work had happened in a completely different session driven by the routine.

I debated my options, and decided to try replicating the same “inject the bundles” approach I’d tried with the routine. This time, our own app client would try sending the evaluation commands to the backend. The idea was that if I did that once per pause point, from there our existing wrapper for the RDT UI components would work the same way no matter whether we were looking at an FF recording (with the RDT bundle already in the browser) or a Chromium recording (with the bundle freshly injected on demand).

I was able to mostly copy-paste the modified bundles and the evaluation functions from the routine code over into our app client codebase. And sure enough: after a bit more experimentation, this injection technique actually worked! I was able to click on component nodes and see the props show up in the RDT UI panel, as well as having our “highlight DOM node layout in page preview” feature work again.

Deployment

The last step was to get this actually running as part of our backend. I’d looked at bits of the backend repo, and the routines files lived there, but I’d never tried to get it running for real. A couple of folks from our backend team helped walk me through the setup steps for doing daily dev work on the backend repo.

From there, we tried running the routines in local dev for the first time (as opposed to the standalone run script I’d been using). This took another couple hours, including figuring out some timing issues with when the routine logic was getting kicked off during a session… but we got it working! I was able to load that recording made with the updated Chromium build, confirm that the backend had launched the RDT processing routine, and see the “React” tab show up in our UI with the actual component data.

Here’s a link to the actual public recording of the React counter app I used to test the routine. You can click on the “React” tab, jump to different points in the recording timeline, and see the component tree change to match what’s in the page:

Final Thoughts

This was an extremely exciting project to work on! I’ve always enjoyed taking two pieces of software that were never meant to work together, and finding ways to smash them up and do something new and interesting (a whole lot of hammering square pegs into round holes!). In this case, we had no guarantee that any of this approach would actually be feasible and work the way we wanted. The React DevTools backend was only designed to run in a real browser, and I’m pretty sure our protocol API wasn’t designed with “evaluate entire JS bundles” in mind. Happily, both were designed well enough that something like this was possible. Along the way, I learned a lot about our backend protocol API, how pauses work, evaluating JS as strings, and doing development + deployment on our backend repo.

Currently, the repo containing the actual routines scaffolding is still internal, but I’ve included the final React DevTools routine implementation below for reference.

Long-term, we’d like to try making the routines functionality public, and we’d love to work with other framework/library authors to begin adding devtools/debugging support for other frameworks beyond React - please reach out to us and we’d be happy to talk!

Next up on our list is support for the Redux DevTools, which should also benefit other state libraries like NgRx and Jotai. I’m excited to tackle this and see how we can leverage analysis routines to solve a different set of problems, and make this a reality!

Pull Requests

Here’s the public PRs that I filed as part of this work:

- Client-side RDT updates: https://github.com/replayio/devtools/pull/8397

- Chromium stub functions: https://github.com/replayio/chromium/pull/293

- React DevTools fork: https://github.com/replayio/react/compare/main…replayio:react:feature/replay-react-devtools

Final React DevTools Routine Implementation

typescript/* Copyright 2022 Record Replay Inc. */ // Routine to run the react devtools backend in a recording and generate its operations arrays. import { Annotation, ExecutionPoint } from '@replayio/protocol' import { pointStringCompare } from '../../../shared/point' import { ArrayMap } from '../../../shared/array-map' import { assert } from '../../../shared/assert' import { Context } from '../../../shared/context' import { Routine, RoutineSpec } from '../routine' const REACT_HOOK_KEY = '__REACT_DEVTOOLS_GLOBAL_HOOK__' // These are plain JS files that are being imported at build time, // so that we can stringify the JS functions and send them to be // evaluated at runtime. We don't currently have a good way to // include these files as assets in a backend deployment otherwise. // @ts-ignore import { reactDevToolsWrapper } from './assets/react_devtools_backend' // @ts-ignore import { installHookWrapper } from './assets/installHook' async function evaluateInTopFrame( routine: Routine, point: ExecutionPoint, expression: string, cx: Context ): Promise<string> { const pause = await routine.ensurePause(point, cx) const frames = await pause.getFrames() assert(frames && frames.length, 'No frames for evaluation') const topFrameId = frames[0].frameId const rv = await pause.evaluate(topFrameId, expression, cx) return rv?.value ?? 'null' } declare global { interface Window { testValue: number [REACT_HOOK_KEY]: any __REACT_DEVTOOLS_SAVED_RENDERERS__: any[] savedOperations: number[][] evaluationLogs: string[] logMessage: (message: string) => void } } // EVALUATED REMOTE FUNCTIONS: // Evaluated in the pause to do initial setup function mutateWindowForSetup() { // Makeshift debug logging for code being evaluated in the pause. // Call `window.logMessage("abc") into the RDT bundles, and // then we retrieve this array at the end to see what happened. window.evaluationLogs = [] window.logMessage = function (message) { window.evaluationLogs.push(message) } // Save the emitted RDT operations from this pause window.savedOperations = [] // Delete the stub hook from our browser fork delete window.__REACT_DEVTOOLS_GLOBAL_HOOK__ } // function readWindow() { // return JSON.stringify(window.__REACT_DEVTOOLS_GLOBAL_HOOK__); // } function readRenderers() { return JSON.stringify({ renderers: window.__REACT_DEVTOOLS_SAVED_RENDERERS__ }) } // function getMessages() { // return JSON.stringify({ messages: window.evaluationLogs }); // } function getOperations() { return JSON.stringify({ operations: window.savedOperations }) } // Evaluated in the pause to connect the saved renderer instances with the RDT backend function injectExistingRenderers() { window.__REACT_DEVTOOLS_SAVED_RENDERERS__.forEach(renderer => { window.__REACT_DEVTOOLS_GLOBAL_HOOK__.inject(renderer) }) } // Evaluated in the pause to save emitted operations arrays function subscribeToOperations() { // @ts-ignore window.__REACT_DEVTOOLS_GLOBAL_HOOK__.sub('operations', newOperations => { window.savedOperations.push(newOperations) }) } // Evaluated in the pause to force the RDT backend to evaluate an unmount function forwardOnCommitFiberUnmount() { const renderer = window.__REACT_DEVTOOLS_GLOBAL_HOOK__.rendererInterfaces.get( // @ts-ignore rendererID ) // @ts-ignore window.__REACT_DEVTOOLS_GLOBAL_HOOK__.onCommitFiberUnmount( // @ts-ignore rendererID, // @ts-ignore fiber ) renderer.flushPendingEvents() } // Evaluated in the pause to force the RDT backend to evaluate a commit function forwardOnCommitFiberRoot() { // @ts-ignore window.__REACT_DEVTOOLS_GLOBAL_HOOK__.onCommitFiberRoot( // @ts-ignore rendererID, // @ts-ignore root, // @ts-ignore priorityLevel ) } interface EvaluationArgs { routine: Routine point: ExecutionPoint cx: Context } // Take a function, stringify it, evaluate it in the pause, and return the result async function evaluateNoArgsFunction( evaluationArgs: EvaluationArgs, fn: () => any ) { const { routine, point, cx } = evaluationArgs return evaluateInTopFrame(routine, point, `(${fn})()`, cx) } interface OperationsInfo { point: ExecutionPoint time: number operations: number[] } // Maximum number of points in the recording we will gather operations from. // const MaxOperations = 500; async function fetchReactOperationsForPoint( evaluationArgs: EvaluationArgs, type: 'inject' | 'commit-fiber-root' | 'commit-fiber-unmount' ): Promise<number[][]> { // Delete the stub `REACT_DEVTOOLS_GLOBAL_HOOK` object from our browser fork, // so that we can install the real React DevTools instead. await evaluateNoArgsFunction(evaluationArgs, mutateWindowForSetup) // Evaluate the actual RDT hook installation file, so that this pause // has the initial RDT infrastructure available await evaluateNoArgsFunction(evaluationArgs, installHookWrapper) await evaluateNoArgsFunction(evaluationArgs, readRenderers) // When we install the rest of the RDT backend logic, it will emit an // event containing the operations array. Subscribe to that event // so that we can capture the array and retrieve it. await evaluateNoArgsFunction(evaluationArgs, subscribeToOperations) // Evaluate the actual RDT backend logic file, so that the rest of the // RDT logic is installed in this pause. await evaluateNoArgsFunction(evaluationArgs, reactDevToolsWrapper) // Our stub code saved references to the React renderers that were in the page. // Force-inject those into the RDT backend so that they're connected properly. await evaluateNoArgsFunction(evaluationArgs, injectExistingRenderers) // There's two primary React events that we care about: // - a "root commit", which adds or rearranges components in the tree // - an "unmount" event, which removes a component from the tree // In either case, we're paused in our stub wrapper function, with the right // function args in the current scope. // Call the real RDT hook object function with those arguments, which will // do the appropriate tree processing and emit an event with the operations array. if (type === 'commit-fiber-root') { await evaluateNoArgsFunction(evaluationArgs, forwardOnCommitFiberRoot) } else if (type === 'commit-fiber-unmount') { await evaluateNoArgsFunction(evaluationArgs, forwardOnCommitFiberUnmount) } // Serialize the operations array that we captured in a global `window` field. const operationsText = await evaluateNoArgsFunction( evaluationArgs, getOperations ) const { operations } = JSON.parse(operationsText) as { operations: number[][] } return operations } async function runReactDevtoolsRoutine(routine: Routine, cx: Context) { const hookAnnotations: ArrayMap<string, Annotation> = new ArrayMap() const annotations = await routine.getAnnotations('react-devtools-hook', cx) annotations.forEach(annotation => { const { message } = JSON.parse(annotation.contents) hookAnnotations.add(message, annotation) }) const fiberCommits = hookAnnotations.map.get('commit-fiber-root') || [] const fiberUnmounts = hookAnnotations.map.get('commit-fiber-unmount') || [] const commitsWithUpdates = fiberCommits.concat(fiberUnmounts) commitsWithUpdates.sort((a, b) => pointStringCompare(a.point, b.point)) // TODO Batch these into groups of 25 or so? Also apply MAX_OPERATIONS? const allPointResults: OperationsInfo[] = await Promise.all( commitsWithUpdates.map(async commitAnnotation => { const { point, time, contents } = commitAnnotation const { message } = JSON.parse(contents) const evaluationArgs: EvaluationArgs = { routine, point, cx } const operations = await fetchReactOperationsForPoint( evaluationArgs, message ) return { point, time, operations: operations[0] } }) ) const allOperations = allPointResults.filter(p => !!p.operations) // TODO Do we care about MaxOperations? We already did the processing work to fetch them. // if (allOperations.length > MaxOperations) { // allOperations.length = MaxOperations; // } // Unmounts will not know about the current root ID. Normally the backend will // set this when flushing the unmount operations during the next onCommitFiberRoot // call, but we can fill in the roots ourselves by looking for the next commit. for (let i = 0; i < allOperations.length; i++) { const { operations } = allOperations[i] if (operations[1] === null) { for (let j = i + 1; j < allOperations.length; j++) { const { operations: laterOperations } = allOperations[j] if ( laterOperations[1] !== null && operations[0] === laterOperations[0] ) { operations[1] = laterOperations[1] break } } } } const finalCalculatedAnnotations: Annotation[] = [] for (const { point, time, operations } of allOperations) { // Ignore operations which don't include any changes. if (operations.length <= 3) { continue } const annotation: Annotation = { point, time, kind: 'react-devtools-bridge', contents: JSON.stringify({ event: 'operations', payload: operations }) } finalCalculatedAnnotations.push(annotation) cx.logger.debug('BackendOperations', { point, time, operations }) routine.addAnnotation(annotation) } } export const ReactDevtoolsRoutine: RoutineSpec = { name: 'ReactDevtools', version: 2, runRoutine: runReactDevtoolsRoutine, shouldRun: ({ runtime }) => runtime == 'chromium' }