Rerecording is a technique we’ve developed to streamline the process of testing patches.

Replay already eliminates the need for reproduction steps when understanding a bug: just file an issue with a link to the recording, and that recording can be debugged by a developer as if they were sitting right there when the problem happened. However, once they develop a fix, there isn’t an easy way to see whether that fix actually works and addresses the reported problem. This is where rerecording comes in.

Rerecording takes an initial recording along with an updated version of the app we want to test, and generates a new recording of the updated app which performs the same interactions that happened in the initial recording. This makes it easy to see the effect of the update: if the problem has been fixed, that can be seen in the new recording’s video and screenshots, and if there is more work to do, the new recording can be debugged in the same way as the initial one.

We’ve packaged rerecording into a GitHub action that watches for PRs linked to issues that were reported using a recording. When these PRs are created or updated and there is a preview deployment (e.g. from Vercel) for the new version of the app, the action rerecords the app. Rerecording runs against the new frontend deployment but doesn’t interact with the backend: it mocks all network requests using data from the original recording. Rerecording doesn’t work 100% of the time. It will fail if, for example, the updated app makes different network requests, or changes the page layout so much that the original interactions can’t be performed again.
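To make the mocking idea concrete, here is a minimal sketch of serving requests from recorded data and failing when the updated app asks for something the original recording never saw. It is an illustration only, not Replay's implementation: the `NetworkMock`, `RecordedResponse`, and `UnrecordedRequestError` names, and the choice to key responses by method and URL, are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordedResponse:
    """A response captured in the original recording (hypothetical shape)."""
    status: int
    body: str


class UnrecordedRequestError(Exception):
    """The updated app made a request with no remaining recorded response."""


class NetworkMock:
    """Answers requests from recorded data only; never contacts a backend."""

    def __init__(self, recorded):
        # recorded maps (method, url) -> responses in the order they were seen.
        # Copy the lists so that replaying does not mutate the caller's data.
        self._queues = {key: list(responses) for key, responses in recorded.items()}

    def handle(self, method, url):
        queue = self._queues.get((method, url))
        if not queue:
            # Either the request was never recorded, or the updated app made it
            # more times than the original did. Both mean rerecording cannot
            # continue faithfully.
            raise UnrecordedRequestError(f"{method} {url}")
        return queue.pop(0)
```

Under these assumptions, a request that matches the recording gets the recorded response back, and anything else raises an error. That corresponds to the failure case described above, where the updated app makes different network requests.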

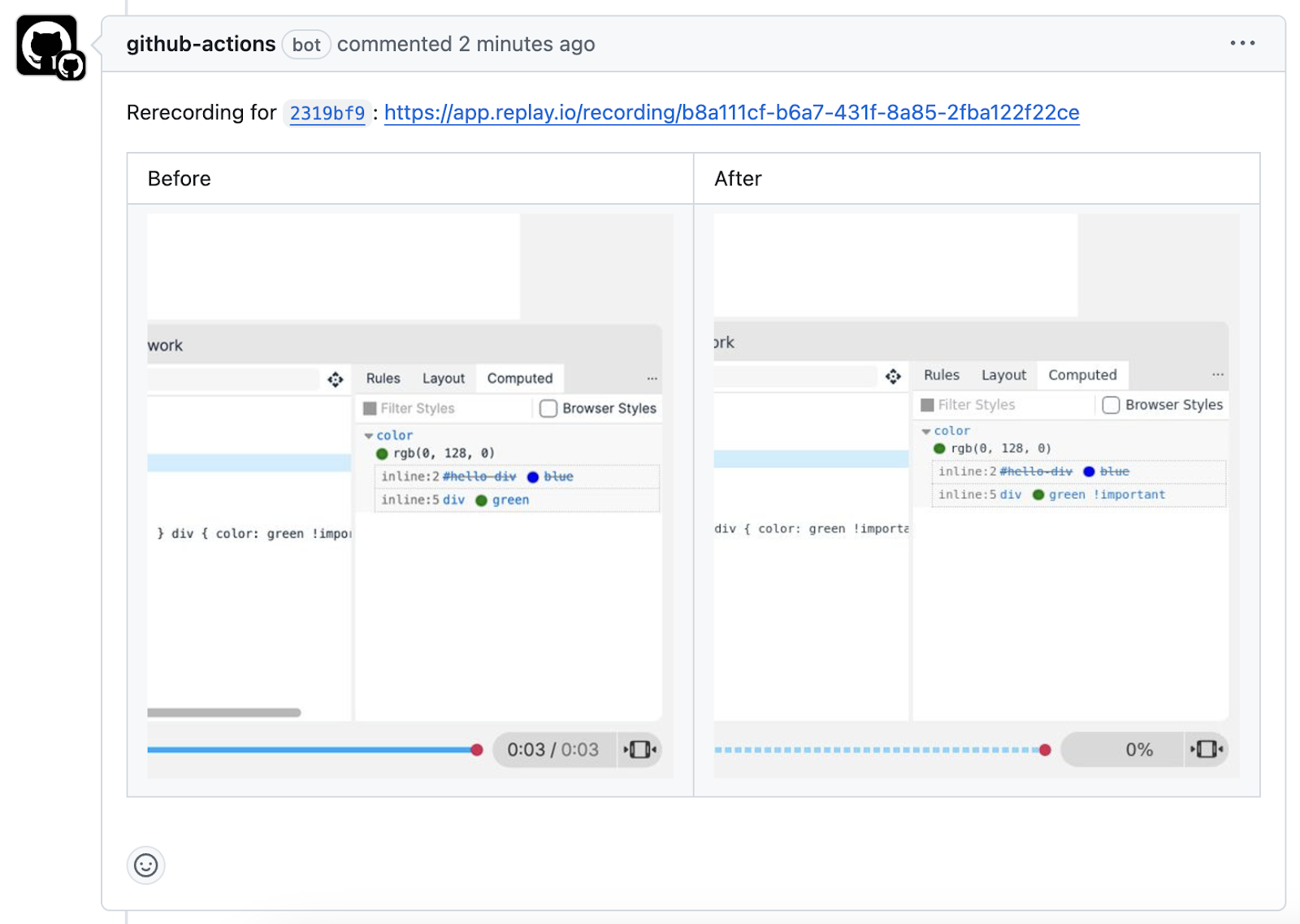

When rerecording finishes, the action compares the new recording with the original to show the effects of the PR. For any comment in the original recording the action looks at the corresponding point in the new recording and generates before/after screenshots. If the after screenshot is acceptable, the new recording doesn’t need to be opened at all.
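How the action finds the "corresponding point" is not described here. As one possible approach, the sketch below maps a comment's time in the original recording to the new recording by anchoring it to the most recent replayed interaction. The function names, the millisecond timestamps, and the anchoring rule itself are assumptions made for illustration.

```python
from bisect import bisect_right


def corresponding_time(comment_ms, original_interactions, new_interactions):
    """Map a time in the original recording to a time in the new recording.

    Both interaction lists hold sorted timestamps (ms) for the same sequence
    of user interactions, one list per recording.
    """
    if len(original_interactions) != len(new_interactions):
        raise ValueError("recordings must contain the same interactions")

    # Index of the last interaction at or before the comment.
    idx = bisect_right(original_interactions, comment_ms) - 1
    if idx < 0:
        # Comment precedes every interaction: assume the same offset from start.
        return comment_ms

    # Keep the comment's distance from its anchoring interaction.
    offset = comment_ms - original_interactions[idx]
    return new_interactions[idx] + offset


def screenshot_points(comment_times, original_interactions, new_interactions):
    """Return (before_ms, after_ms) pairs at which to capture screenshots."""
    return [
        (t, corresponding_time(t, original_interactions, new_interactions))
        for t in comment_times
    ]
```

With interactions at 1000 ms and 3000 ms in the original and at 1200 ms and 3500 ms in the new recording, a comment at 3400 ms would map to 3900 ms under this rule.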

Below is the result of the action when tested on the same improvement to Replay’s devtools which we looked at in a recent blog post. The issue is that important CSS rules aren’t marked as such in the computed styles for an element, and we can clearly see from the screenshots that the PR is a suitable fix.

The recording and screenshots give a reviewer everything they need to understand the effects of the PR. No one has to reproduce the issue by hand to generate these screenshots or learn how the PR works. The developer can also use rerecording to test changes easily while iterating on the PR, provided they can wait a couple of minutes for the new recording to be generated.

These are useful improvements right now, but we’re especially excited about the further streamlining that rerecording enables:

- Autonomous AI developers need an easy way to test their patches. Similar to the difficulties that AI developers have navigating larger code bases, AIs have difficulty following reproduction steps (in addition to the challenges of using a web browser). Rerecording enables a feedback loop where the AI developer can continue tuning its patch until it behaves as expected.

- The data needed for rerecording, such as network payloads and user interactions, can be collected by tooling separate from the Replay browser, such as a browser extension. In most cases, making an initial recording of this lower-fidelity data and then rerecording with the Replay browser on a remote machine will still reproduce the problem the user was seeing, without the user having to download and configure the Replay browser.
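The feedback loop from the first point above could look roughly like the sketch below. Everything here is hypothetical: `propose_patch`, `rerecord`, and `looks_fixed` are placeholder callables standing in for an AI developer, the rerecording step, and whatever check decides the patch behaves as expected.

```python
def tune_patch(propose_patch, rerecord, looks_fixed, max_attempts=5):
    """Propose patches until a rerecording looks fixed or attempts run out.

    Returns (patch, attempts_used) on success, or (None, max_attempts).
    """
    feedback = None  # nothing to learn from before the first attempt
    for attempt in range(1, max_attempts + 1):
        patch = propose_patch(feedback)
        recording = rerecord(patch)
        if looks_fixed(recording):
            return patch, attempt
        # Hand the failing recording back so the next patch can use it.
        feedback = recording
    return None, max_attempts
```

The attempt cap is a design choice for the sketch: each rerecording takes a couple of minutes, so an unbounded loop would be costly.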

The GitHub action for rerecording is free to use but still in its early days. Let us know if you’d like to try it out to develop and review PRs more easily. Fill out our contact form or email hi@replay.io and we’ll be in touch.