Build Real Apps in One Hour

Last week, we organized our first-ever vibe-coding contest. We invited contestants to bring their favorite AI-assisted development tool and compete for a cash prize, with just one hour on the clock to build something real. The contestants didn't know what to expect. And neither did we.

We organized this event to answer a few pressing questions that had been on our minds. Where does vibe-coding actually stand right now? What can these tools genuinely deliver? And perhaps more importantly, where do they still hit a wall?

On October 27th, we set out to answer them in the most authentic way possible: by watching contestants build real applications live, using vibe-coding tools under real-world time constraints.

The Motivation Behind the Contest

Vibe-coding seems to be everywhere these days. Every week, there's a new tool, a new feature, or a fresh take on the concept. There's certainly a lot of hype surrounding these tools, but beneath all the noise, there's something genuinely significant happening: a fundamental shift in how we approach prototyping and building applications.

As a company that builds its own vibe-coding platform, we felt it was important to step back and honestly assess the state of the art. This wasn't just about benchmarking ourselves against competitors; it was about understanding the real capabilities and limitations of these tools in the hands of skilled practitioners.

We also wanted to approach vibe-coding as a competitive sport of sorts, to see how much user skill actually matters. Can someone with years of development experience build something fundamentally better than someone brand new to these tools? The contest wasn't just about crowning winners; it was about creating a transparent space where contestants could demonstrate exactly what these tools can and can't do in real time. That insight, we believed, would be invaluable for everyone in the field.

The Contest Setup

We decided to livestream the entire event, making it open and accessible to anyone interested in following along. After introducing the contestants and judges, we announced the assignment: build a calendar booking application, similar to services like Calendly or cal.com.

The task was deliberately ambitious. We didn't want a simple prototype; we wanted to see how far contestants could push their tools. The app needed a logged-in state where administrators could configure their available time slots. It also needed to add events to a calendar, manage bookings, and give end users an intuitive booking flow. In short, we wanted a fully functional experience built in just sixty minutes.
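
To make the "configure available time slots" requirement a little more concrete, here is a minimal sketch of how an admin-defined availability window could be expanded into bookable slots. The names `Availability` and `generate_slots`, the 30-minute default slot length, and the example date are illustrative assumptions, not code from any contestant's app.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Availability:
    """Hypothetical admin setting: one open booking window on a single day."""

    start: datetime
    end: datetime
    slot_minutes: int = 30  # assumed default slot length


def generate_slots(window: Availability) -> list[datetime]:
    """Return the start time of every full slot that fits inside the window."""
    if window.slot_minutes <= 0:
        raise ValueError("slot_minutes must be positive")
    step = timedelta(minutes=window.slot_minutes)
    slots: list[datetime] = []
    current = window.start
    # A slot is only offered if it finishes by the end of the window.
    while current + step <= window.end:
        slots.append(current)
        current += step
    return slots


if __name__ == "__main__":
    morning = Availability(datetime(2024, 1, 15, 9, 0), datetime(2024, 1, 15, 11, 0))
    print([s.strftime("%H:%M") for s in generate_slots(morning)])
```

For a 9:00 to 11:00 window this prints `['09:00', '09:30', '10:00', '10:30']`; a partial slot at the end of a window is simply not offered.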

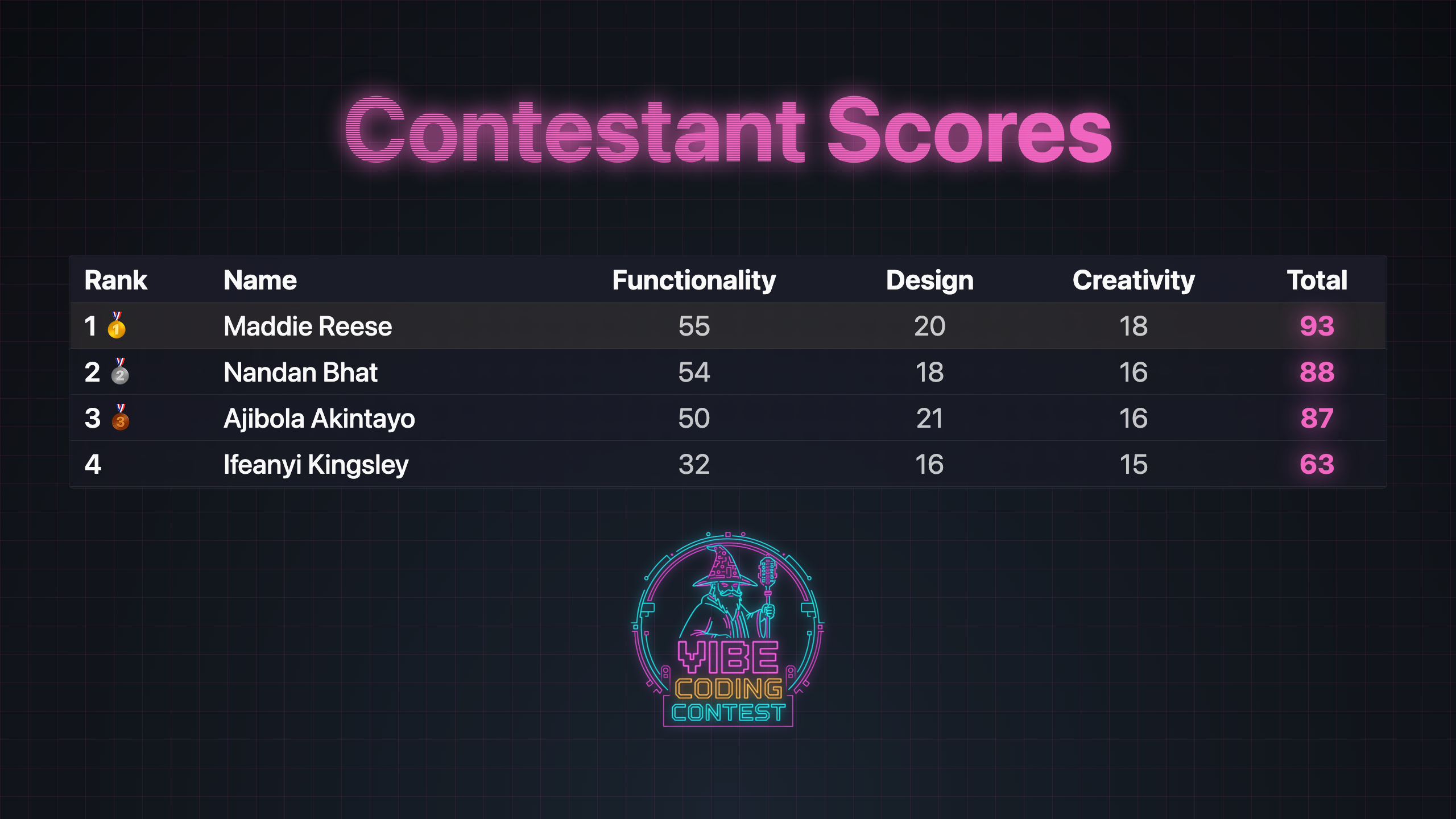

Four contestants joined us: two using Lovable and two using Replit, both popular vibe-coding platforms. All of them delivered impressive work and scored remarkably high on functionality. Maddie Reese ultimately took first place, though our judges noted they had an unusually difficult time deciding, because the quality was consistently strong across the board.

The judges scored each app on three criteria, for a maximum of 40 points. Functionality was worth up to 20 points, with points deducted for bugs found during testing. Design and user experience could earn up to 10 points, and creativity, which rewarded originality and unexpected problem-solving, could also earn up to 10 points.

What the Tools Actually Delivered

What immediately stood out was that the applications built in under an hour were substantially better than what a single developer could typically produce in the same time working traditionally. Both the quality and the speed of development were genuinely impressive.

What's perhaps most striking is how the tools handled the debugging process. Contestants would describe errors or unexpected behavior, and the AI would intelligently suggest fixes, often catching issues that weren't immediately obvious. We watched in real time as problems that might have taken a developer thirty minutes to debug were resolved in two or three back-and-forths with the AI.

However, we also saw where the limitations emerged. Complex business logic sometimes took several iterations to get right. A few contestants ran into state-management issues when the app had to track multiple pieces of user data across different screens. One example was a time slot that had been booked only seconds earlier but was not yet blocked, leaving it open to a second booking. Details like these usually require more than just a good vibe.
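
One plausible cause of that kind of double booking is a check-then-write race: two requests both see a slot as free before either one records the booking. As a minimal sketch, assuming a single-process app that keeps bookings in memory, the fix is to make the availability check and the write a single atomic step. `BookingStore`, `SlotAlreadyBooked`, and the method names are hypothetical and not taken from any contestant's code.

```python
import threading
from datetime import datetime


class SlotAlreadyBooked(Exception):
    """Raised when someone tries to book a slot that is already taken."""


class BookingStore:
    """Hypothetical in-memory store that refuses double bookings."""

    def __init__(self) -> None:
        self._bookings: dict[datetime, str] = {}
        self._lock = threading.Lock()

    def book(self, slot: datetime, guest: str) -> None:
        # Check and write under one lock so two near-simultaneous requests
        # cannot both see the slot as free.
        with self._lock:
            if slot in self._bookings:
                raise SlotAlreadyBooked(slot.isoformat())
            self._bookings[slot] = guest

    def is_available(self, slot: datetime) -> bool:
        with self._lock:
            return slot not in self._bookings


if __name__ == "__main__":
    store = BookingStore()
    slot = datetime(2024, 1, 15, 9, 30)
    store.book(slot, "first guest")
    try:
        store.book(slot, "second guest")
    except SlotAlreadyBooked:
        print("rejected: slot already booked")
```

An in-process lock only protects a single server process. A deployed app would more typically enforce the same rule in the database, for example with a unique constraint on the booked slot, so the check and the write stay atomic across every server handling requests.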

This is one of the problems we are trying to solve with nut.new. The Replay.io debugger runs in the background and passes this kind of debugging information to the AI agent automatically, creating a feedback loop that gives users a truly hands-off experience. The benefit goes beyond not having to paste error messages into the chat. As users build more apps, the whole system improves incrementally, accumulating a database of solutions that can be reused as building blocks for future apps.

The Bigger Picture

What's happening here is significant. We're witnessing a moment where the tools have matured enough to be genuinely useful for rapid prototyping and MVP development, yet still require human judgment and expertise to reach production quality. The apps created weren't just demos; they were functional applications that could, in principle, go live with minimal additional work.

The current state of vibe-coding tools suggests we're still some distance from fully automated app creation, but we're also further along than many people realize. The rate of progress has been remarkable, and it's changing what we should expect from development velocity.

What's Next

This contest was genuinely exciting for us, but it's really just one chapter in a much longer story about how software gets built. We're still in the early stages of truly understanding the capabilities of these tools, and we believe public competitions and learning spaces like this are crucial for pushing the entire field forward.

The insights we gathered from watching contestants work in real time will inform our own product development and help us better understand where to focus our efforts. If you missed the livestream, we have recordings available. If you're interested in participating in future contests or collaborating on research around vibe-coding, we'd genuinely love to hear from you. The best ideas come from people who are actively using these tools, discovering both their potential and their boundaries every day.