Have you ever had a client service stop working because some API’s data format changed? Problems like this are a common annoyance when developing software and, without rigorous testing, will cause outages in production. With Replay and OpenHands, these issues can be fixed automatically within a few minutes of detection.

When tests start failing on a PR in development, get a commit with a fix instead of investigating. When synthetic monitoring fails in production, get a new PR with a fix and an unbelievably fast time to repair.

No one should have to figure out what went wrong.

This works simply and effectively because the two technologies complement each other. OpenHands is an AI developer, and like other AI developers it is great at making straightforward changes, such as updating a repository to handle a new data format. It needs to know what the new data format is, however, and can't figure that out on its own. Replay records the failing application, capturing the new data and every problem the application runs into while handling it. This information is passed to OpenHands, giving it the context it needs to generate the right fix.
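As a rough illustration of the kind of context that helps, here is a minimal TypeScript sketch that turns a captured runtime value into a compact, schema-like description an AI developer could read. The `describeShape` function and the sample payload (including the `origin` and `durationMs` fields) are hypothetical; this is not how Replay or OpenHands actually represent recorded data.

```typescript
// Hypothetical helper, not Replay's or OpenHands' real output format:
// summarize a runtime value as a compact, TypeScript-like type string.
function describeShape(value: unknown, depth = 0, maxDepth = 4): string {
  if (value === null) return "null";
  if (Array.isArray(value)) {
    // Describe an array by its first element to keep the output short.
    if (value.length === 0 || depth >= maxDepth) return "unknown[]";
    return `${describeShape(value[0], depth + 1, maxDepth)}[]`;
  }
  if (typeof value === "object") {
    if (depth >= maxDepth) return "object";
    const fields = Object.entries(value as Record<string, unknown>).map(
      ([key, field]) => `${key}: ${describeShape(field, depth + 1, maxDepth)}`,
    );
    return fields.length === 0 ? "{}" : `{ ${fields.join("; ")} }`;
  }
  // Primitives: "string", "number", "boolean", "undefined", etc.
  return typeof value;
}

// Hypothetical captured payload using the new nesting described in this post;
// `origin` and `durationMs` are made-up field names for illustration.
const captured = {
  analysisResult: {
    summaries: [{ origin: "page load", durationMs: 1200 }],
  },
};

console.log(describeShape(captured));
// { analysisResult: { summaries: { origin: string; durationMs: number }[] } }
```

A type-like summary such as this is short enough to paste into a prompt, while still telling the reader exactly where the data now lives.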

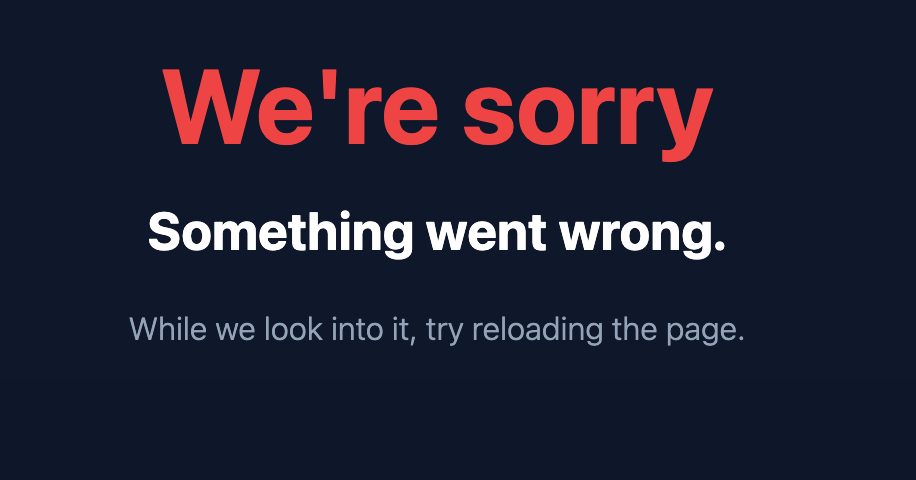

Let's see how this works on an example of this sort of problem that we ran into ourselves. We're working on a new UI that automatically identifies and explains performance regressions in web applications (more on that soon). We built an initial version of the UI, then made some backend changes over the course of a couple of weeks. When we returned to the UI, we got the dispiriting error shown above.

After the UI fetches the performance data it needs, the exception below is thrown from a React component while rendering the data.

```text
TypeError: Cannot read properties of undefined (reading 'map')
    at f (PerformanceMockup.tsx:25:27)
    at ak (react-dom.production.min.js:167:135)
    at i (react-dom.production.min.js:290:335)
    at oD (react-dom.production.min.js:280:383)
    at react-dom.production.min.js:280:319
    at oO (react-dom.production.min.js:280:319)
    at oE (react-dom.production.min.js:271:86)
    at oP (react-dom.production.min.js:273:286)
    at r8 (react-dom.production.min.js:127:100)
    at react-dom.production.min.js:267:262
```

Here is the relevant code in PerformanceMockup.tsx:

```tsx
<div className="m-4 overflow-y-auto">
  {result.summaries.map((summary, index) => {
    const props = { summary };
    return <OriginDisplay key={index} {...props}></OriginDisplay>;
  })}
</div>
```
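The failure mode can be reproduced without React. The minimal TypeScript sketch below runs the component's access pattern (`result.summaries.map(...)`) against two payloads: one in the shape the component was written for, and one where `summaries` has moved under an `analysisResult` property, as the investigation in this post finds. The `Summary` type, its `origin` field and the `renderLabels` function are hypothetical stand-ins for the real component and data.

```typescript
// Hypothetical summary type; the real fields of a summary aren't shown in the post.
type Summary = { origin: string };

// The component was written against this (old) response shape.
type OldResult = { summaries: Summary[] };

// Same access pattern as PerformanceMockup.tsx. The data arrives from the
// network, so the compiler can't guarantee it really matches OldResult.
function renderLabels(result: OldResult): string[] {
  return result.summaries.map((summary, index) => `${index}: ${summary.origin}`);
}

const oldPayload: unknown = { summaries: [{ origin: "page load" }] };
const newPayload: unknown = { analysisResult: { summaries: [{ origin: "page load" }] } };

console.log(renderLabels(oldPayload as OldResult)); // [ '0: page load' ]

try {
  renderLabels(newPayload as OldResult);
} catch (err) {
  // In recent V8 versions (Chrome, Node.js) this prints:
  // TypeError: Cannot read properties of undefined (reading 'map')
  console.log(String(err));
}
```

The type assertion is what lets the mismatch through: TypeScript trusts the declared shape, so the error only surfaces at runtime, when `result.summaries` is `undefined`.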

For us humans, fixing this isn't very hard. We can reproduce the error and use Chrome's devtools to inspect the contents of the

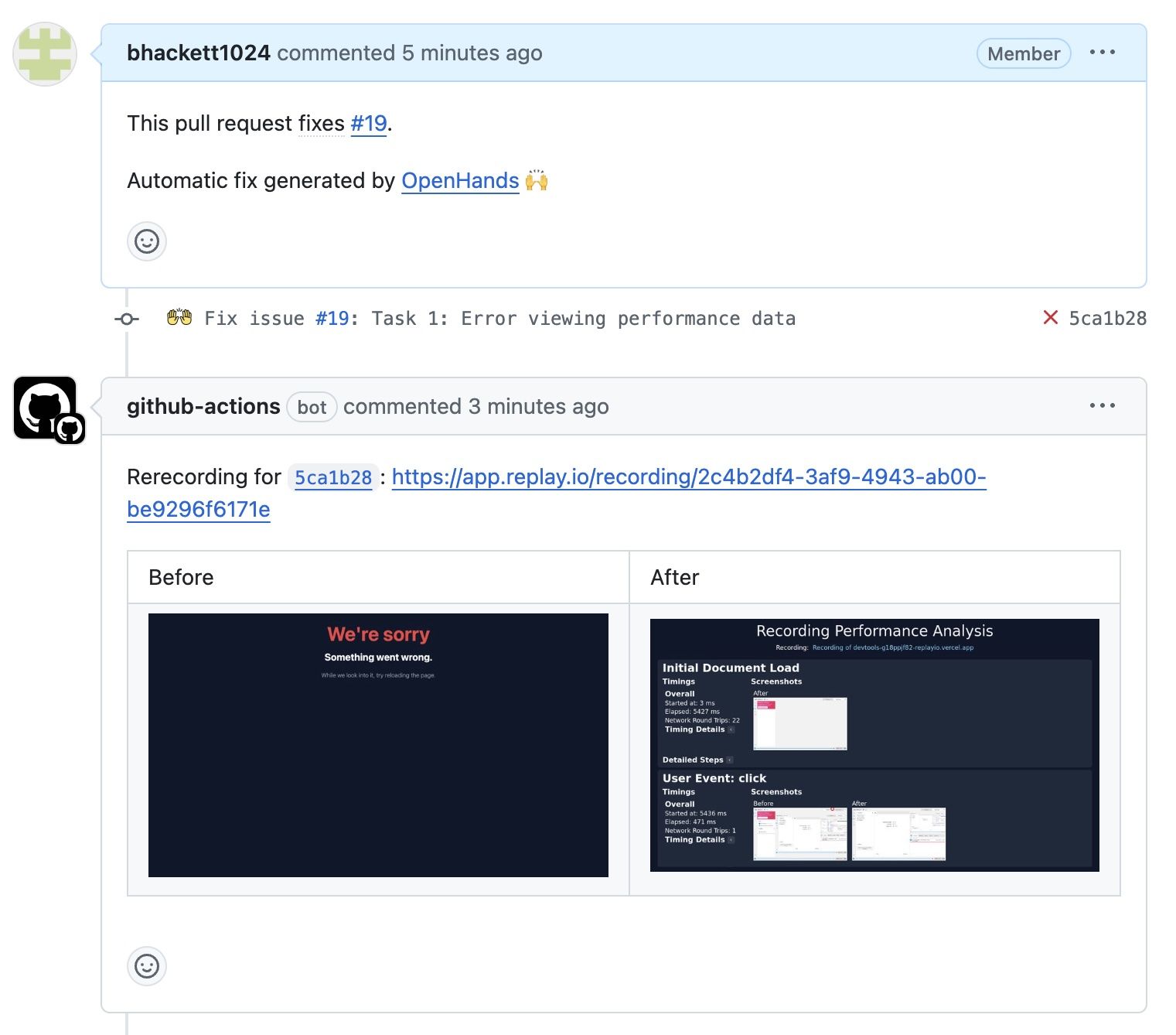

result object being accessed, either using some console logs or by pausing when the exception is thrown to inspect the state. It now has an analysisResult property which contains the summaries, so we need to replace result.summaries with result.analysisResult.summaries.We don't want to fix this by hand, however. An AI developer should be able to do this instead. We tried OpenHands and Copilot Workspace on this, to understand what they need to produce the right fix. Showing them the error and a hint that the problem may be a data format change leads to a wrong patch, as the developer will find a way to prevent the error (like changing

summaries.map to summaries?.map) but the result won't be rendered correctly. Additionally describing the contents of the result object (either the literal values, or a JSON schema) gives both developers enough to produce a good patch.Using Replay, we can automatically describe the contents of the

result object for OpenHands. With a recording of the page error and no further guidance, some automated analysis finds the failed React render, the point where the associated error was thrown, and then describes the contents of the variables in scope at that point. In the resulting PR simulation is used to generate a new recording showing the problem is fixed, and we can merge the PR without further investigation.
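To make the difference between the two patches concrete, here is a minimal TypeScript sketch, with plain strings standing in for the rendered `OriginDisplay` elements. Only `summaries` and `analysisResult` come from the post; the `origin` field and both function names are hypothetical. The optional-chaining patch stops the exception but yields `undefined`, which React renders as nothing, while reading through `analysisResult` renders every summary.

```typescript
// Hypothetical shapes: only `summaries` and `analysisResult` come from the post.
type Summary = { origin: string };
type NewResult = { analysisResult: { summaries: Summary[] } };

const result: NewResult = {
  analysisResult: { summaries: [{ origin: "page load" }, { origin: "click" }] },
};

// Wrong patch: keep reading the old location but guard it with `?.`.
// The value is viewed loosely, as untyped JSON would be at runtime.
function renderWithOptionalChaining(r: unknown): string[] | undefined {
  const legacy = r as { summaries?: Summary[] };
  return legacy.summaries?.map((summary) => summary.origin);
}

// Right patch: read the summaries from their new location.
function renderFromAnalysisResult(r: NewResult): string[] {
  return r.analysisResult.summaries.map((summary) => summary.origin);
}

console.log(renderWithOptionalChaining(result)); // undefined: no error, nothing rendered
console.log(renderFromAnalysisResult(result)); // [ 'page load', 'click' ]
```

Both patches make the exception go away, which is why the error message alone isn't enough; only knowledge of the new data shape distinguishes the correct fix.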

This approach will generalize well to a wide variety of sudden application breakages, especially when historical recordings from before the breakage are available to compare against. If you'd like to use our Replay+OpenHands integration to automatically fix problems like this, we'd love to hear from you. Email us at hi@replay.io or fill out our contact form and we'll be in touch.