2024 has been an incredible year for AI developers. At the start of the year, agents could complete 3% of SWE-bench. Today, agents complete over 50% of SWE-bench Verified, and it’s likely we’ll see scores of 70-90% next year as we begin to saturate the benchmark.

The question is no longer “can AI developers help in real-world environments?” but “how do we increase the percentage of tasks they complete successfully?” Put another way, now that agents have gotten better at writing code, how do we help them get better at QAing and debugging their work?

We’re obviously excited about this development because we’ve always believed that understanding how code works and why it fails is 90% of the battle.

This post is the story of how we’re giving AI developers the tools they need to succeed, and of the incredible results we’re beginning to see. The story starts with fixing failing browser tests but progresses toward a general-purpose AI developer.

Background

In July, we pivoted from building dev tools for developers to building dev tools for AI agents. The joke internally was that it’d be easier to teach agents how to fix a flaky test than to get developers to care about fixing flaky tests.

After all, we’d already proven that once you had recorded a passing and a failing run of a Cypress or Playwright test, you had all the context needed to find the divergence; you just had to look for it!
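Replay works on full browser recordings, so the sketch below only illustrates the underlying idea: given a passing and a failing run, find the first point where they diverge. It assumes each recording has already been reduced to a flat list of events; the `Event` format and the `first_divergence` helper are hypothetical, not part of Replay.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Event:
    """One recorded step (hypothetical format), e.g. a click, a fetch, or a render."""
    kind: str
    detail: str


def first_divergence(passing: Sequence[Event], failing: Sequence[Event]) -> Optional[int]:
    """Return the index of the first event where the two recordings differ,
    or None if they are identical."""
    for i, (p, f) in enumerate(zip(passing, failing)):
        if p != f:
            return i
    # No mismatch in the shared prefix: the traces differ only if one is longer.
    if len(passing) != len(failing):
        return min(len(passing), len(failing))
    return None


passing = [Event("click", "#checkout"), Event("fetch", "/api/cart 200"), Event("render", "Cart(3)")]
failing = [Event("click", "#checkout"), Event("render", "Cart(0)"), Event("fetch", "/api/cart 200")]
print(first_divergence(passing, failing))  # 1: the failing run rendered before the fetch returned
```

Comparing events position by position is a deliberate simplification. Real recordings contain benign differences such as timestamps and harmless reorderings, which a practical diff would need to normalize before looking for the meaningful divergence.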

In September, we showed how an AI agent could fix Playwright tests in minutes. This was a double win: the test gets fixed, and honestly, nobody enjoys hunting down these silly bugs.

Replay Simulation

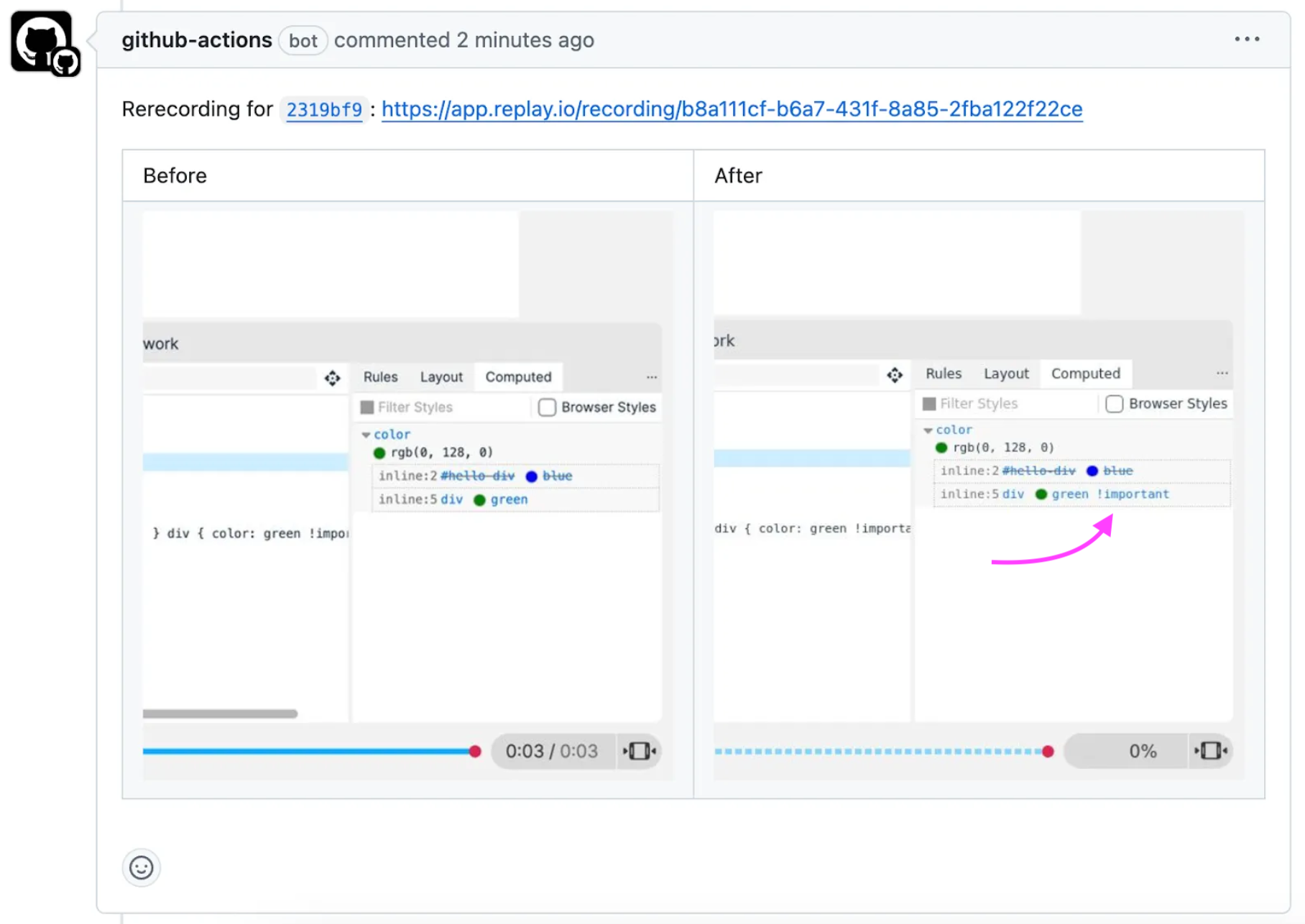

After we showed that we could find the problem, the next question was whether we could validate that the fix was correct. The challenge with flaky tests is that they fail infrequently and almost never reproduce on your laptop.

This was the basis of Replay Simulation. We’d spent the past four years building a deterministic browser that could record a session and replay it in the cloud exactly as it had happened, but we’d never attempted to run it again with modified code!

Here’s what the ideal flow would look like:

- Record the failing test

- Localize the problem

- Introduce a fix

- Re-run the test with the identical inputs

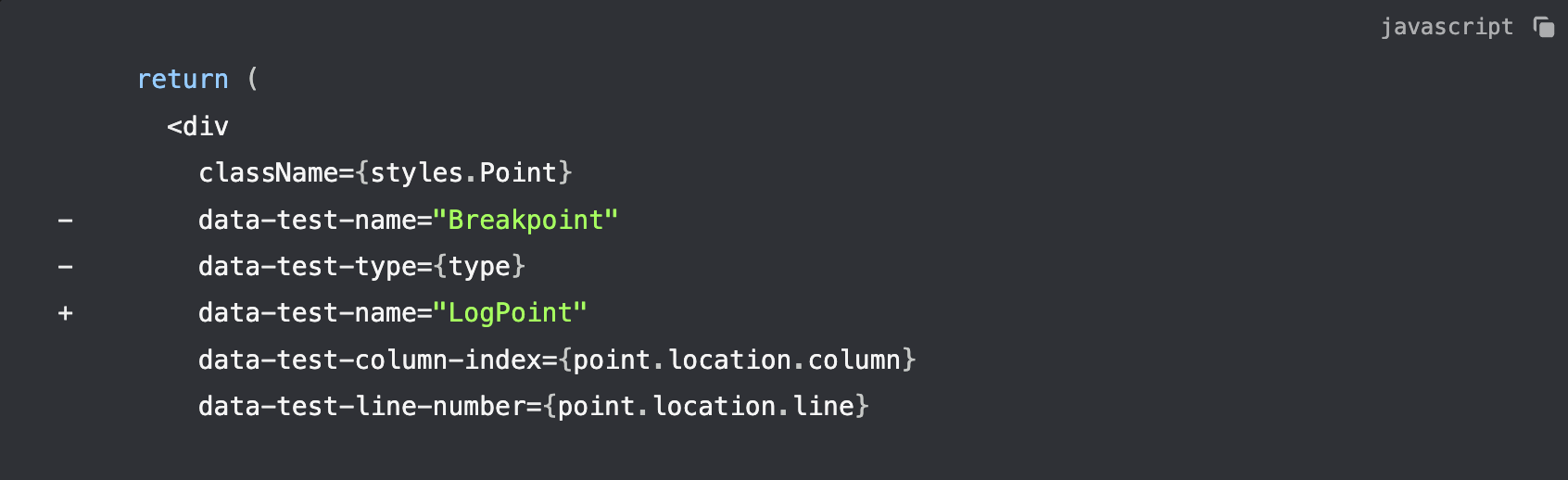

Before October, we were able to do steps 1-3 pretty well, but we’d always known that step 4 would close the loop and let us test our hypotheses. That step is Replay Simulation. We shipped it in November, and it is everything we hoped it would be.
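Replay Simulation does this for an entire browser. The toy sketch below only illustrates the principle behind step 4, assuming the sole source of nondeterminism is a pair of network latencies drawn from a random source. `Recorder`, `Replayer`, and the `render_cart_*` functions are hypothetical names for illustration, not Replay APIs.

```python
import random
from typing import List, Optional


class Recorder:
    """Hands out nondeterministic values and logs each one (hypothetical stand-in for recording)."""

    def __init__(self, rng: random.Random) -> None:
        self.rng = rng
        self.log: List[float] = []

    def next_value(self) -> float:
        value = self.rng.random()
        self.log.append(value)
        return value


class Replayer:
    """Hands back the logged values in their original order, so a re-run sees identical inputs."""

    def __init__(self, log: List[float]) -> None:
        self.log = list(log)
        self.pos = 0

    def next_value(self) -> float:
        value = self.log[self.pos]
        self.pos += 1
        return value


def render_cart_buggy(source) -> str:
    # Two responses race; their latencies are the only nondeterministic inputs.
    items_ms, total_ms = source.next_value(), source.next_value()
    # Bug: renders as soon as the items arrive, even if the total has not arrived yet.
    total = 3 if total_ms <= items_ms else None
    return f"total={total}"


def render_cart_fixed(source) -> str:
    items_ms, total_ms = source.next_value(), source.next_value()
    # Fix (step 3): render only after both responses have arrived.
    render_at = max(items_ms, total_ms)
    total = 3 if total_ms <= render_at else None
    return f"total={total}"


def record_failing_run(render, attempts: int = 1000) -> Optional[List[float]]:
    """Step 1: run until the flaky failure shows up and keep its recording."""
    for seed in range(attempts):
        recorder = Recorder(random.Random(seed))
        if render(recorder) != "total=3":
            return recorder.log
    return None


log = record_failing_run(render_cart_buggy)
assert log is not None
# Step 4: re-run with identical inputs, first with the original code, then with the fix.
print(render_cart_buggy(Replayer(log)))  # total=None: the failure reproduces every time
print(render_cart_fixed(Replayer(log)))  # total=3: the fix passes on the same inputs
```

In this toy, the patched function reads the same inputs in the same order as the original. A patch that changed what the code reads would need a more forgiving replayer than this strict, index-based one.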

Replay Flow

Replay Simulation is the first foundational feature that an AI Developer needs in the debugging loop. The second feature is Replay Flow.

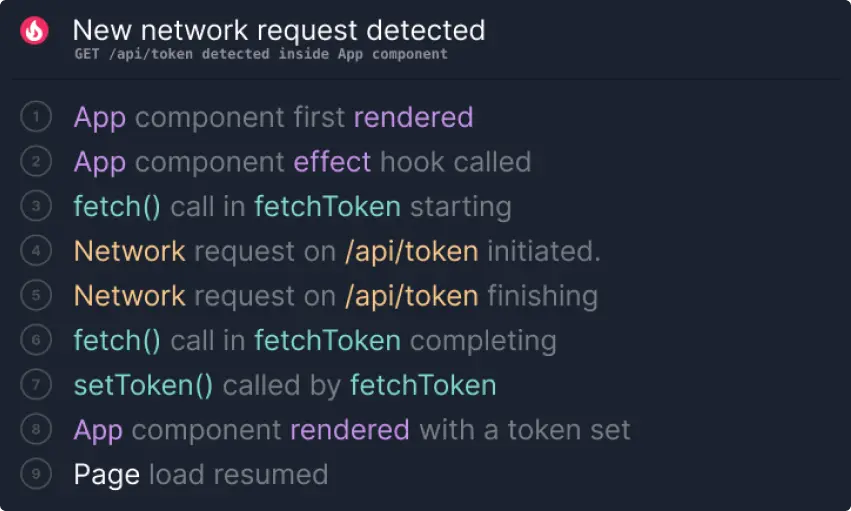

If you think about what developers do when debugging a hard problem, it’s a lot of console logging, code searching, long walks, and rubber ducking. With Replay Flow, we instrument the runtime and make that data available to the agent through tool calling.

Replay Flow has dramatically simplified the debugging process. At a high level, if the agent used to need ten code-search and console-log actions to answer a question like “how did the data reach component B?”, it can now get the answer with a single query. Replacing ten steps that each have a 90% chance of succeeding with one step is a huge reliability improvement: if the steps fail independently, all ten succeed only about 35% of the time.
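The compounding behind this claim is easy to check. The sketch below assumes the steps fail independently and, since the post doesn’t give a success rate for the single query, assumes 90% for it as well; `chain_success` is a hypothetical helper.

```python
def chain_success(p_step: float, n_steps: int) -> float:
    """Probability that n independent steps all succeed, each with probability p_step."""
    return p_step ** n_steps


ten_steps = chain_success(0.9, 10)  # ten code-search / console-log actions
one_step = chain_success(0.9, 1)    # a single query (90% assumed for illustration)
print(f"ten steps: {ten_steps:.1%}, one step: {one_step:.1%}")
# ten steps: 34.9%, one step: 90.0%
```

Because the chain’s success rate decays geometrically with its length, cutting the number of steps matters more than small per-step gains: even at 95% per step, ten steps all succeed only about 60% of the time.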

Generalizing

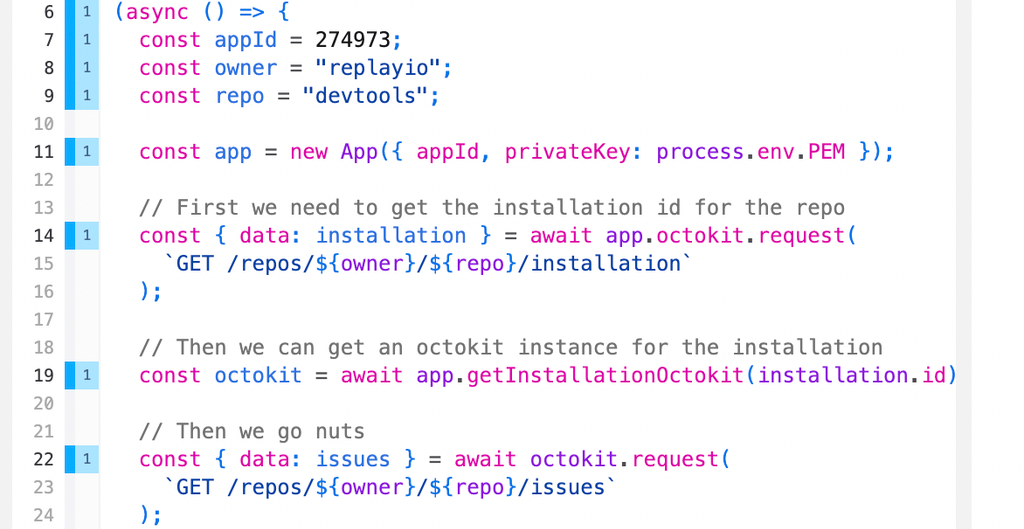

With Replay Simulation and Replay Flow, we’ve been able to generalize our approach beyond fixing failing tests toward fixing arbitrary bugs and implementing new features. We’ve integrated Replay with OpenHands, an open-source AI developer, and provided several tools for it to use. To get started, you only need to provide two things: a description of what you want to achieve and a recording of your app.

It’s still early days, but we’ve been excited to see how often the agent is able to successfully complete tasks that it previously could not!

If you’re building an AI developer, we’d love to hear how you’re improving your debugging loop and whether Replay might be a good fit. Feel free to reach out to hi@replay.io. And if you’d like to start using Replay, check out Changelog 71 for next steps.