Bolt.new and similar tools like Lovable, v0, and Replit all make an enticing offer: describe what you want to build, and an AI developer builds it for you. Keep refining by describing the changes you want, and the AI makes them. We believe this is how most software will be developed in the future. The democratizing impact is profound: anyone can build an application just by talking to an AI.

This future isn’t here yet, though. These tools are amazing at getting a mostly working prototype up and running fast, but then you hit a wall. You ask the AI to make a change, but it’s unable to do it or introduces new bugs – one step forward, two steps back. This recent blog post from Addy Osmani describes the pattern: the AI gets you 70% of what you want from the prototype but no more. Then you have to start hacking on the code yourself.

What stands in the way of a better Bolt that lets you create complete, functioning prototypes and even production-grade software entirely by talking to an AI? Better code-writing LLMs will certainly help, but there is a separate and perhaps more important problem: giving the LLM the right context.

These tools work by feeding the project’s source code and the user’s prompt to an LLM and asking it what changes to make. This isn’t how people build software, however. People use developer tools to get the context they need to understand what is going wrong and figure out the exact fix. This context, which comes from console logs, the debugger, network monitoring, and similar tools, describes the runtime behavior of the application and is unavailable to the LLM.
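To make the gap concrete, here is a minimal sketch of how a prompt might be assembled with and without runtime observations. It is not Bolt’s actual implementation: `RuntimeContext`, `build_prompt`, the prompt layout, and the sample file and error messages are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class RuntimeContext:
    """Hypothetical container for what a developer would see in dev tools."""
    console_logs: List[str] = field(default_factory=list)
    failed_requests: List[str] = field(default_factory=list)


def build_prompt(files: Dict[str, str], request: str,
                 runtime: Optional[RuntimeContext] = None) -> str:
    """Assemble the text sent to the LLM (illustrative layout, an assumption)."""
    parts = ["## Source files"]
    for path, source in sorted(files.items()):
        parts.append(f"### {path}\n{source}")
    parts.append(f"## User request\n{request}")
    # Without runtime data, the model only sees static code plus the request.
    if runtime is not None:
        parts.append("## Runtime observations")
        parts.extend(f"console: {line}" for line in runtime.console_logs)
        parts.extend(f"network: {line}" for line in runtime.failed_requests)
    return "\n\n".join(parts)


# Made-up example inputs.
files = {"src/App.tsx": "export default function App() { /* ... */ }"}
request = "The save button does nothing when clicked."

static_prompt = build_prompt(files, request)
runtime_prompt = build_prompt(
    files,
    request,
    RuntimeContext(
        console_logs=["TypeError: onSave is not a function"],
        failed_requests=["POST /api/save -> 404"],
    ),
)
```

The two prompts are identical up to the final section. The second one simply adds the kind of evidence a person would gather from the console and network panel before attempting a fix.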

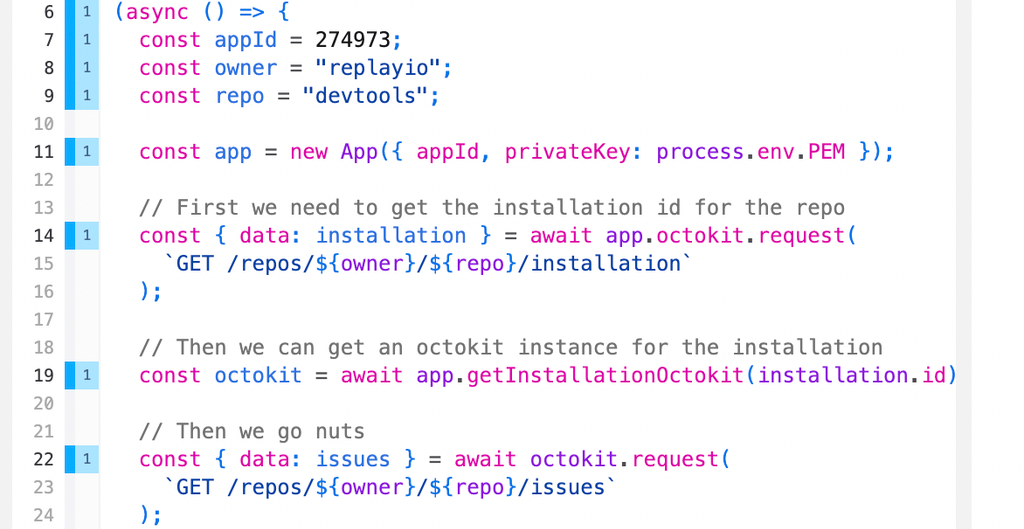

In recent months we’ve explored how supplying even a little runtime data can determine whether an LLM succeeds or fails at a task: [1] [2] [3] [4]. Bolt needs this runtime data to advance beyond the 70% barrier, and fortunately it’s easy to supply using Replay. When you’re browsing the app in Bolt we can’t see the details of what it’s doing, but we can capture some information – the network requests made, user interactions, and so on – and use simulation to create a Replay recording of the app with all the exact details needed.
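As a rough illustration of the capture step, the sketch below logs externally observable events (network requests and user interactions) and orders them into a timeline that a simulation could later consume. This is not Replay’s API or recording format: `CapturedEvent`, `SessionCapture`, and the event fields are hypothetical names assumed for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CapturedEvent:
    timestamp_ms: int  # milliseconds since page load (assumed clock)
    kind: str          # "network" or "interaction"
    detail: str


class SessionCapture:
    """Hypothetical recorder for events visible from outside the app."""

    def __init__(self) -> None:
        self._events: List[CapturedEvent] = []

    def record_network(self, timestamp_ms: int, method: str,
                       url: str, status: int) -> None:
        detail = f"{method} {url} -> {status}"
        self._events.append(CapturedEvent(timestamp_ms, "network", detail))

    def record_interaction(self, timestamp_ms: int, action: str,
                           target: str) -> None:
        detail = f"{action} on {target}"
        self._events.append(CapturedEvent(timestamp_ms, "interaction", detail))

    def timeline(self) -> List[CapturedEvent]:
        """Return events in chronological order, regardless of arrival order."""
        return sorted(self._events, key=lambda event: event.timestamp_ms)


# Made-up session: the network response is logged before the click that caused it.
capture = SessionCapture()
capture.record_network(1250, "POST", "/api/save", 404)
capture.record_interaction(1200, "click", "button#save")

ordered = capture.timeline()
```

Only inputs and outputs at the app’s boundary are stored here. The internal details a developer needs would come from the simulation step that reproduces the app’s behavior from these events.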

We’re working on a modified Bolt (which is open source, thanks StackBlitz!) that uses Replay to get runtime data and swaps out its AI developer for OpenHands, which we’ve integrated with Replay and which is powerful, flexible, and efficient at working on larger codebases. With this we can start breaking down the 70% barrier and help usher in a future of faster, easier, and far more accessible software development.

If you’ve used Bolt or similar tools and have hit obstacles you want removed, we’d love to hear from you! Reach us at hi@replay.io or fill out our contact form and we’ll be in touch.